Selected Publications

-

Visual Recognition with Deep Nearest Centroids [pdf(arxiv)][code]. W. Wang*†, C. Han*, T. Zhou*, D. Liu†. International Conference on Learning Representations (ICLR), 2023 (Spotlight). *Equal Contribution

-

Prompt Learns Prompt: Exploring Knowledge-Aware Generative Prompt Collaboration For Video Captioning [pdf(IJCAI)]. L. Yan*, C. Han*, Z. Xu, D. Liu†, Q. Wang. International Joint Conference on Artificial Intelligence (IJCAI), 2023. *Equal Contribution

-

E^2VPT: An Effective and Efficient Approach for Visual Prompt Tuning [pdf(arxiv)][code]. C. Han*, Q. Wang, Y. Cui, Z. Cao, W. Wang, S. Qi, D. Liu†. International Conference on Computer Vision (ICCV), 2023.

-

Unified 3D Segmenter As Prototypical Classifiers [pdf(Openreview)][code]. Z. Qin*, C. Han*, X. Lu, Q. Wang, X. Nie, Y. Yin†. Conference on Neural Information Processing Systems (NeurIPS), 2023. *Equal Contribution

-

Facing the Elephant in the Room: Visual Prompt Tuning or Full Finetuning? C. Han*, Q. Wang, Y. Cui, W. Wang, L. Huang, S. Qi, D. Liu†. International Conference on Learning Representations (ICLR), 2024. [pdf(Openreview)][code]

-

Image Translation as Diffusion Visual Programmers. C. Han, J. C. Liang, Q. Wang, M. Rabbani, S. Dianat, R. Rao, Y. N. Wu, D. Liu†. International Conference on Learning Representations (ICLR), 2024. [pdf(Openreview)][Website].

-

ProMotion: Prototypes As Motion Learners. Y. Lu, D. Liu, Q., C. Han, Y. Cui, Z. Cao, X. Zhang, Y. V. Chen, H. Fan†. IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR), 2024. [pdf]

-

Prototypical Transformer as Unified Motion Learners. C. Han*, Y. Lu*, G. Sun, J. C. Liang, Z. Cao, Q. Wang, Q. Guan, S. A. Dianat, R. M. Rao, T. Geng, Z. Tao†, D. Liu†. International Conference on Machine Learning (ICML), 2024. [pdf]

-

Self-supervised Adversarial Training of Monocular Depth Estimation against Physical-World Attacks. Z. Cheng, C. Han, J. C. Liang, Q. Wang, X. Zhang, D. Liu†. IEEE Transactions on Pattern Analysis and Machine Intelligence (IEEE TPAMI), 2024. [pdf]

-

Optical Flow as Spatial-Temporal Attention Learners. Y. Lu*, C. Han*, Q. Wang, H. Fan, Z. Kong, D. Liu, Y. Chen. IEEE Transactions on Pattern Analysis and Machine Intelligence (IEEE TPAMI), 2024.

-

AMD: Automatic Multi-step Distillation of Large-scale Vision Models. C. Han, Q. Wang, S. A. Dianat, M. Rabbani, R. M. Rao, Y. Fang, Q. Guan, L. Huang, D. Liu†. The European Conference on Computer Vision (ECCV), 2024. [pdf]

-

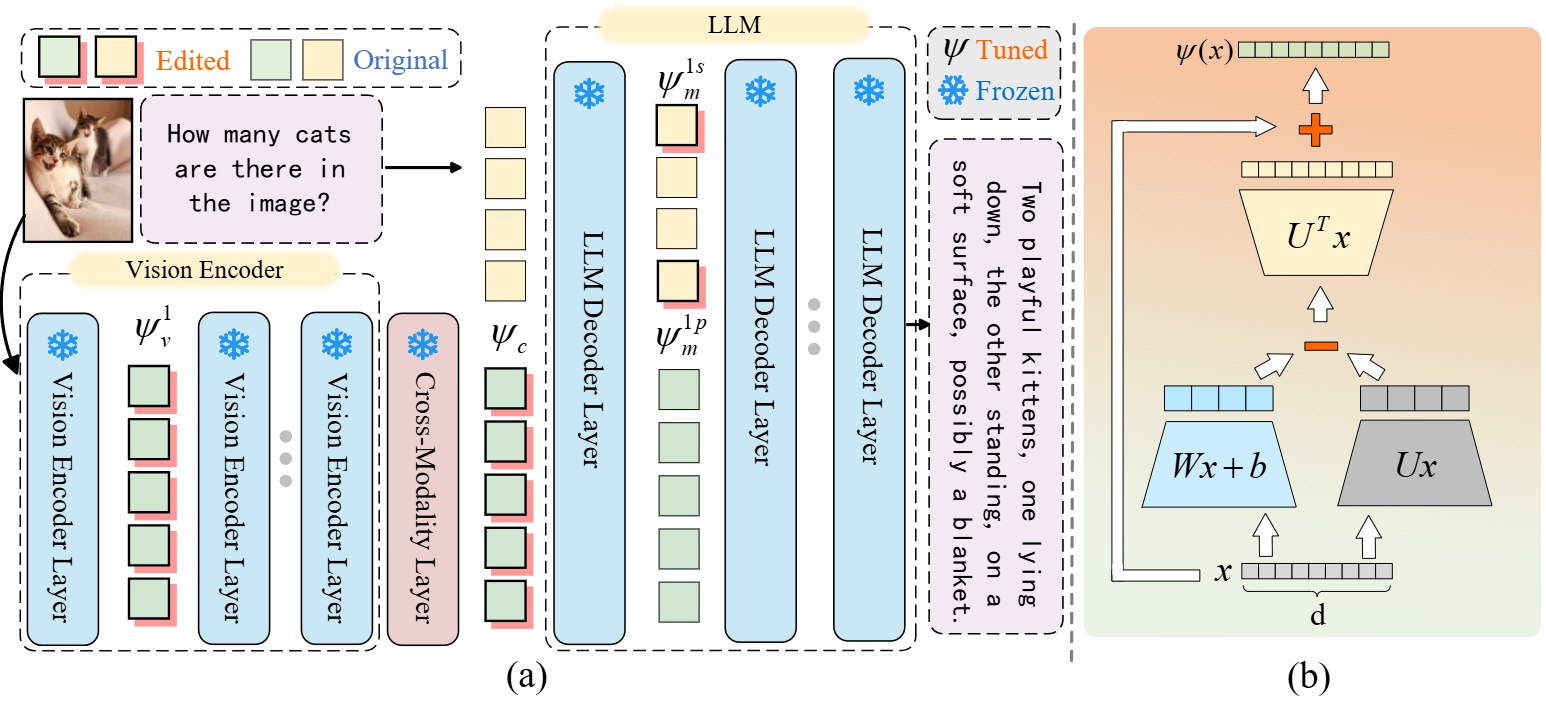

M^2PT: Multimodal Prompt Tuning for Zero-shot Instruction Learning. T. Wang, Y. Liu, J. C. Liang, J. Zhao, Y. Cui, Y. Mao, S. Nie, J. Liu, F. Feng, Z. Xu, C. Han, L. Huang, Q. Wang, D. Liu. The Conference on Empirical Methods in Natural Language Processing (EMNLP), 2024. [pdf]

-

Visual Fourier Prompt Tuning. R. Zeng*, C. Han*, Q. Wang, C. Wu, T. Geng, L. Rao, L. Huang, Y. N. Wu, D. Liu. The Conference on Neural Information Processing Systems (NeurIPS), 2024. [pdf]

-

Re-Imagining Multimodal Instruction Tuning: A Representation View. Y. Liu, J. C. Liang, R. Tang, Y. Lee, M. Rabbani, S. Dianat, R. Rao, L. Huang, D. Liu, Q. Wang, C. Han†. The International Conference on Learning Representations (ICLR), 2025. [pdf]

-

Exploring the Adversarial Vulnerabilities of Vision-Language-Action Models in Robotics. T. Wang*, C. Han*, J. C. Liang*, W. Yang, D. Liu, L. X. Zhang, Q. Wang, J. Luo, R. Tang. International Conference on Computer Vision (ICCV), 2025. [pdf][code]

-

MEPT: Mixture of Expert Prompt Tuning as a Manifold Mapper. R. Zeng*, G. Sun*, Q. Wang, T. Geng, S. A. Dianat, X. Han, R. Rao, X. Zhang, C. Han, L. Huang, D. Liu. The Conference on Empirical Methods in Natural Language Processing (EMNLP), 2025.

-

Probabilistic Token Alignment for Large Language Model Fusion. R. Zeng, J. C. Liang, C. Han, Z. Cao, J. Liu, X. Quan, Q. Wang, T. Geng, D. Liu. The Conference on Neural Information Processing Systems (NeurIPS), 2025.

-

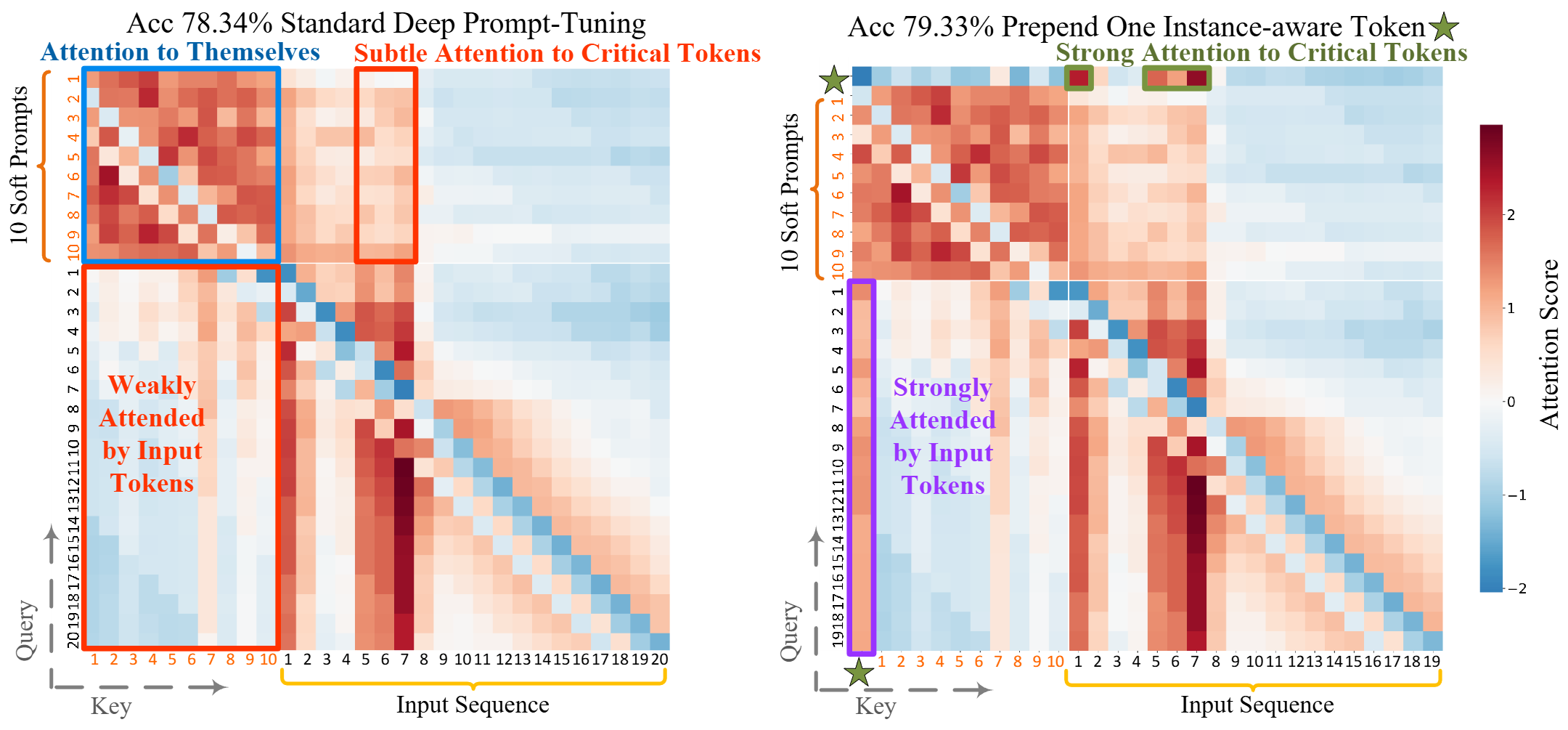

All You Need is One: Capsule Prompt Tuning with a Single Vector. Y. Liu, J. C. Liang, H. Fang, W. Yang, Y. Cui, X. Han, L. Huang, D. Liu, Q. Wang, C. Han. The Conference on Neural Information Processing Systems (NeurIPS), 2025.